This is my last day as Editor in Chief of JGR Space Physics, and so the last day of me writing this blog on being EiC of this journal. This is also post #300; I made it to this conveniently round yet arbitrary number of articles on this site. Over my six years in this role, that’s just about one post per week, which is what I was hoping for when I started it. So, yay for me, I maintained my average and achieved my goal. I occasionally took a month off (often January) or, once, even longer, but as this is an extra thing that I tacked on to my EiC duties, I promised myself to never apologize for taking a break from it. I didn’t then and I still won’t.

Overall, writing this blog has been a very positive experience for me. The response from the community has been overwhelmingly supportive and so I kept it going. In thinking back, I only received one complaint, just after my first SPA newsletter announcement about the existence of this blog. The person lamented that I was starting yet another place for announcements to the community and that I should instead just go through the normal channel of the SPA newsletter. This person failed to see the irony of their comment – they only knew about my blog because I submitted a “blog highlights” announcement to the SPA newsletter! This person has not been a corresponding author on any manuscripts in the journal throughout my term as EiC, though, so perhaps my blog is not for them.

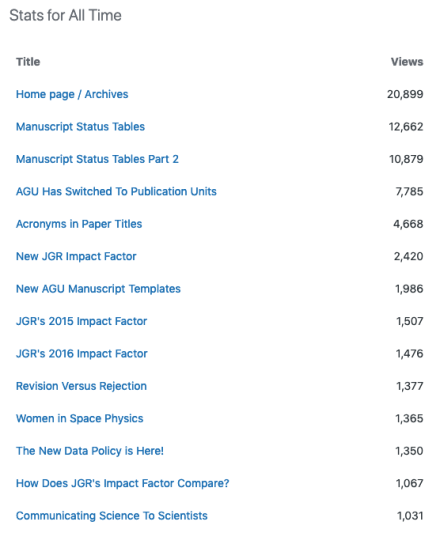

I knew that it would not be for everyone. It is for those who want to know the latest on publication policy and common practices. Those who really want to see the posts as they appear have subscribed to new content alerts (there are about 60 of you) and the rest of the community is reminded of this blog’s existence with my monthly SPA newsletter blurbs. That’s worked out pretty well, accumulating just over 122,000 page views. That’s ~1700 views per month over the 6-year timeline, which I’ll take as a big win for increased communication and transparency.

Those were my main reasons for starting this blog and writing all of the posts – communication and transparency. I had ~90 posts that first year, having a lot of topics regarding AGU publications about which the community was inquiring and that I was discovering through my insider EiC role. Since then, I have averaged ~40 each year, enough to keep your attention (I hope) without boring you with minutiae (I hope). Like a journal article, a blog can also have a least publishable unit. I wanted to stay above that threshold and so I just didn’t post anything when I didn’t have something I deemed important enough to write about. Three hundred posts at ~500 words each is a book, and I might convert these posts into a bound volume in 2020.

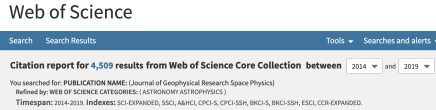

I’d like to extend a huge thank you to all of you who have written or talked to me over the years offering suggestions and ideas for blog posts. This has been a tremendous help for gauging relevance in what to write about here. Some of these suggestions turned into discussions with AGU HQ staff, advocating for changes in publication policy. I am particularly proud of the role that the space physics community played in ending the treatment of preprint server postings as dual publication, as documented in a series of posts in late 2014. Not only did you change the policy, but AGU has since fully embraced preprint servers as a means of increased scientific communication, to the point that it spearheaded the creation of ESSOAr, a preprint server just for our field.

I occasionally went off the topic of publication policy and journal news. This usually was to voice my support for combating sexism and other bias in the scientific workplace. I think my first post on this was my November 2014 recap of Dana Hurley’s Eos article, Women Count. I’d say that this culminated in my June 2016 post about my grad student and sexist microaggressions, with several others that same summer on the topic. I’ve continued this theme ever since, with occasional posts on how diverse teams lead to better solutions to science problems (race, gender, nationality…the more diversity the better) and how we should fight bias in our interactions with each other, including some on being cordial and gender neutral in our publication-related correspondence. So, yes, I tied it back to publications, a bit. As long as I had this platform to the community, I wanted to use it for a cause I feel passionately about and wanted to promote. Thanks, Dana, for your Eos article that spurred me towards this series of posts.

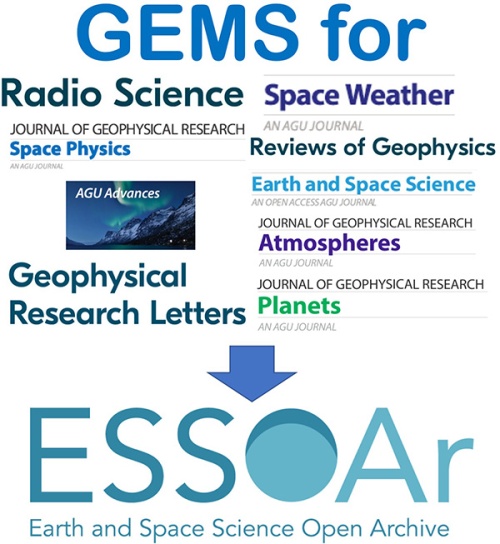

Oh, another thing to say to everyone: don’t choose the outgoing editors when submitting a manuscript to JGR Space Physics. Today is my last day as EiC, but I will still be listed as an editor while the papers that I assigned to myself make their way towards final accept-or-reject decisions. Starting tomorrow, though, when Michael Balikhin is EiC, he will only have 3 editors to whom he can assign new manuscripts: Viviane Pierrard, Natalia Ganushkina, and himself. The other 4 editors – Yuming Wang, Larry Kepko, Alan Rodger, and me – will no longer be taking new manuscript assignments. Because we will still be editors for a few more months, our names will still appear in the list under the “select your editor preference” pull-down menu in GEMS. Please, don’t bother picking us, as Dr. Balikhin will not be assigning any new papers to us.

Which reminds me: the search for new editors for JGR Space Physics is still accepting applications. There are several positions open; Dr. Balikhin expects to appoint two to four new editors. I have heard that several applications have already been submitted, but the mid-January deadline has not yet passed. It is a large service role but, I think, worth the time commitment. Please consider it as a way to give back to the space physics community.

Thanks for letting me ramble on here. I am glad that I did this blog.

I’ll have at least one more Editors’ Vox article, my thank you to everyone as I pass the mantle to Dr. Balikhin, and maybe another Editor’s Highlight or two from the remaining manuscript assignments in my GEMS workflow. Also, I plan to start a new blog with my large service role that I have taken on here at U-M – heading up the University of Michigan Space Institute. I hope to be doing regular blog posts there, starting in January.

Work hard and be nice! Cheers.